Understanding the Tools

To assess Copilot's performance in detecting bias, suggesting inclusive language, and producing output in line with the above-mentioned laws, we compared it to Witty.

What is Microsoft Copilot?

Microsoft Copilot is an AI-powered assistant designed to integrate with Microsoft’s suite, including Word and Outlook. Copilot can assist with various tasks, from drafting text to providing stylistic improvements. Its primary purpose is to support general productivity.

What is Witty?

Witty is an AI-based tool focused on detecting bias in language. It identifies biased terms across more than 50 diversity dimensions, including gender, race, age, mental health, and more. Witty suggests bias-free alternatives, helping companies avoid legal trouble under anti-discrimination laws and AI acts.

Witty is trained to detect several thousand biased terms and phrases in English, German, and French. Its vocabulary is built on research studies, input from specialized linguists, and associations connected to social movements such as Black Lives Matter, #MeToo, LGBTQIA+ rights, and disability rights.

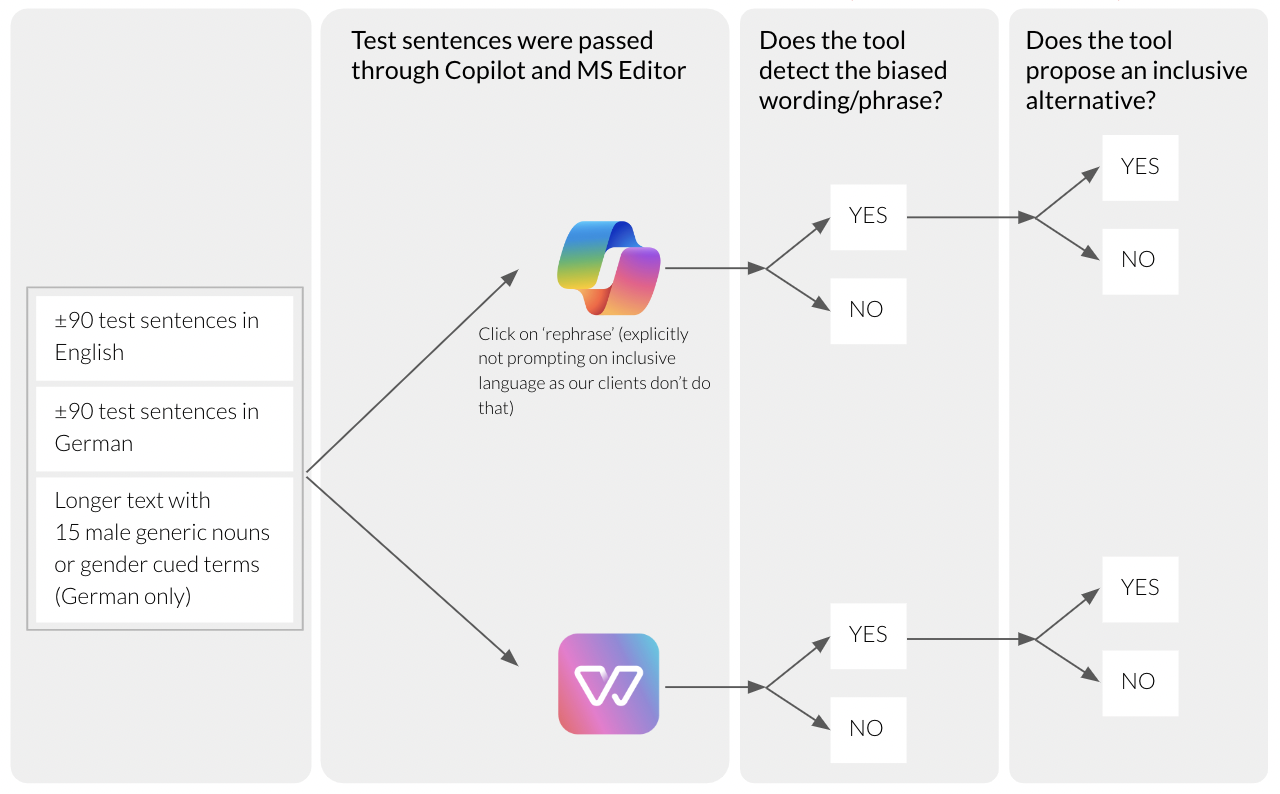

We conducted two studies comparing Copilot and Witty.

Study 1: Imaginary test sentences

In this study, we created imaginary test sentences and used them to test the tools on three main fronts:

- Diversity Dimensions: We tested how well each tool detected bias across various diversity dimensions. For each dimension, we selected non-inclusive terms and wrote test sentences in English and German that could occur in a business setting.

- Handling Male-Generic Language: We examined how each tool handled gendered language in German. In today’s German business world, there is strong support for organizations using gender-neutral language. This can mean dedicated gender-neutral forms, using both the male and the female form, or using a gender sign (like a star, as in “Mitarbeiter*innen”) to also include non-binary people.

- Agentic Language: Agentic language is language with connotations of competition, performance, and pressure. Studies from Harvard and the Technical University of Munich have shown that it particularly deters female talent when used in job descriptions or employer branding content. Witty Works' own studies with Generation Z showed that this age group is not attracted by agentic language either. Because many organizations and industries struggle to attract female and Gen Z talent, Witty takes particular care to detect agentic language.

We chose 30 terms from the agentic language dimension and, for each term, wrote test sentences in English and German that could occur in a business setting.

For Copilot, we entered each sample sentence into a test document. In that document, we clicked the Copilot icon and then “rephrase”, and Copilot rephrased the sentence.

We did not specifically prompt the Copilot chat (in the sidebar) to write the text inclusively. Why not? Observing our own customers as they write, we saw that they give the chat a specific prompt ('write me a social media post on XYZ') but never add a request for inclusive language (like 'write this text inclusively'), falsely assuming that Copilot writes inclusively by default.

For Witty, we pasted the sentence into our Editor, where it was automatically analyzed by Witty.

In each test case, we evaluated two main criteria:

- Did the tool detect the biased wording or phrase?

- Did the tool suggest an inclusive alternative?
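To make the two criteria concrete, here is a minimal Python sketch of how per-sentence judgments could be rolled up into detection and suggestion rates per language. The `SentenceResult` record, the `rates` helper, and the toy judgments are hypothetical illustrations, not the tooling or data used in the study.

```python
from dataclasses import dataclass


@dataclass
class SentenceResult:
    """One test sentence as judged for one tool (hypothetical record format)."""
    term: str        # the non-inclusive term contained in the sentence
    language: str    # "en" or "de"
    detected: bool   # criterion 1: did the tool flag the biased wording?
    suggested: bool  # criterion 2: did the tool offer an inclusive alternative?


def rates(cases: list[SentenceResult], language: str) -> tuple[float, float]:
    """Return (detection rate, suggestion rate) over all cases in one language."""
    subset = [c for c in cases if c.language == language]
    if not subset:
        raise ValueError(f"no test cases for language {language!r}")
    detection = sum(c.detected for c in subset) / len(subset)
    suggestion = sum(c.suggested for c in subset) / len(subset)
    return detection, suggestion


# Toy judgments for a single tool (illustrative only, NOT study results).
cases = [
    SentenceResult("illegal immigrants", "en", detected=True, suggested=True),
    SentenceResult("dominate", "en", detected=True, suggested=False),
    SentenceResult("Arbeiter", "de", detected=False, suggested=False),
    SentenceResult("Mitarbeiter", "de", detected=True, suggested=True),
]

print(rates(cases, "en"))  # (1.0, 0.5)
print(rates(cases, "de"))  # (0.5, 0.5)
```

This sketch computes the suggestion rate over all test sentences; computing it only over sentences where the bias was detected would be an equally valid but different choice.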

Let’s look at the three aspects we tested, with examples for each. If you’re only interested in the quantitative results, feel free to skip ahead to the Quantitative Results section.

1. Detecting Bias Across Diversity Dimensions

A primary distinction between Witty and Copilot lies in the depth of diversity coverage. Witty’s AI is specifically trained across more than 50 diversity dimensions, ensuring that even subtle biases are flagged.

Example Sentence

“The competitor’s company was fined for employing illegal immigrants in their factories.”

- Copilot: May detect the term but often lacks nuanced replacements, frequently retaining “illegal immigrants,” a term that can be perceived as biased.

- Witty: Flags “illegal immigrants” and suggests neutral alternatives, such as “undocumented workers” or “migrant workers,” which are more inclusive and respectful.

This ability to detect a range of biased terms and provide appropriate alternatives is one of Witty’s defining strengths. Copilot, on the other hand, focuses more on stylistic adjustments. In many cases it cannot detect the bias, and when it does, it is not always able to replace it with inclusive wording. This can get a company into legal trouble under anti-discrimination laws and, if Copilot is used in an HR context, under the EU AI Act and the US AI Directives as well.

2. Addressing Gendered Language and the Male Generic

In gendered languages like German, using male-generic terms is a common concern. Organizations increasingly prefer inclusive forms in business contexts, using non-gendered language where possible. The tools’ performance in this area revealed a significant gap.

Example Sentence

Der Arbeiter hat alle Aufgaben erledigt. (“The worker completed all tasks.”)

- Copilot: Struggles to detect and revise male-generic terms, which limits its effectiveness in formulating inclusive language in German.

- Witty: Flags “Arbeiter” as male-generic and proposes inclusive alternatives like “Arbeitende” (workers), which accommodates all genders.

Copilot’s limited functionality in addressing male-generic language highlights a structural limitation: it lacks the configurability for gender neutrality that Witty offers. Here too, this exposes a company to legal risk under anti-discrimination laws and, in HR contexts, under the EU AI Act and the US AI Directives.

3. Identifying and Revising Agentic Language

Agentic language, meaning terms with connotations of competition and assertiveness, can unintentionally exclude certain groups, especially in recruitment. The tools’ responses to agentic language revealed further differences.

Example Sentence

“We’re looking for a candidate who can dominate in their field and drive results.”

- Copilot: Often leaves agentic terms unchanged, as its primary focus is not on refining language to avoid such connotations.

- Witty: Detects agentic terms like “dominate” and suggests alternatives such as “collaborate effectively” or “lead with impact,” which are less likely to deter candidates who do not identify with competitive language.

Copilot is trained on human-created text that carries all the unconscious biases we have as humans. For such a tool, it is practically impossible to detect biases this deeply embedded, and it is therefore also unable to propose inclusive language.

This sensitivity to agentic language is one of Witty’s key strengths, helping organizations use language that attracts and retains talent of all profiles.

Quantitative Results

Let’s now have a look at the detailed results. What did we test?

| | English | German |
|---|---|---|
| Number of diversity dimensions tested | 44 | 46 |
| Number of non-inclusive terms tested | 87 | 92 |

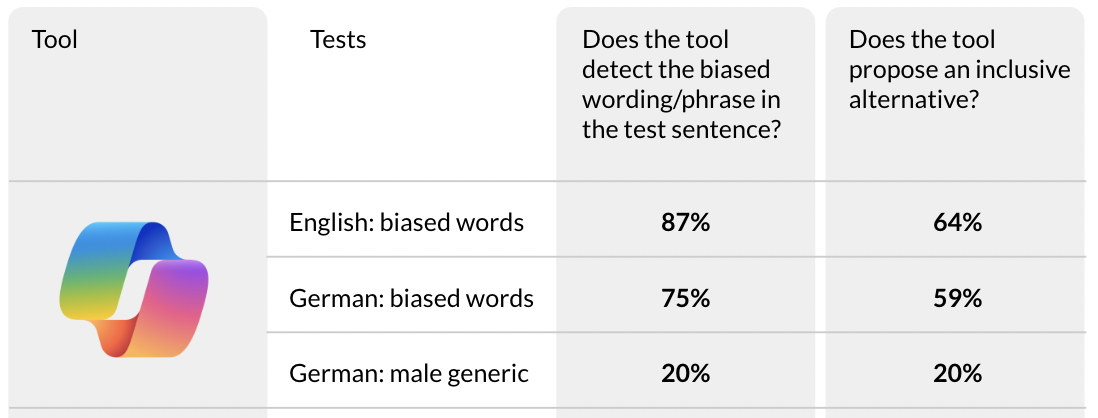

The study’s quantitative results also illustrated differences between the tools:

- Bias Detection Rate: Witty detected 87% of the biased terms in English and 75% in German. Copilot, in comparison, detected 64% in English and 59% in German.

- Inclusive Alternative Suggestions: Witty consistently offered context-appropriate, inclusive suggestions, whereas Copilot’s suggestions were more limited and retained bias.

These findings suggest that, while Copilot can assist with general stylistic changes, its detection and handling of bias fall short of Witty’s targeted approach. For such a sophisticated tool, this lack of reliability in language is a particular risk in today’s diverse world, where organizations are under constant scrutiny. Especially in gendered languages such as German, Copilot offers little help in making texts bias-free and more inclusive.

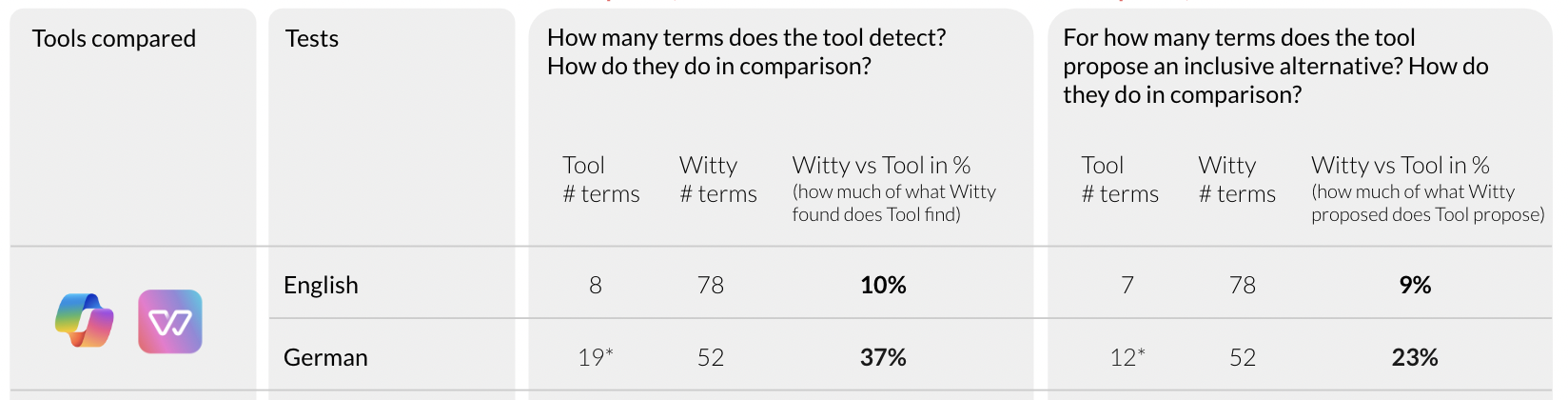

Study 2: Real third-party texts

In our second study, we analyzed five real-world texts from well-known companies: Amazon’s LinkedIn About page, Fiege Logistics’ About page, Kühne & Nagel’s website, a Google employee’s social media post, and a Patagonia job advertisement.

The table below compares how well Microsoft Copilot and Witty detected biased terms and suggested inclusive alternatives. The percentages shown for Copilot indicate how much of what Witty identified was also detected by Copilot. In English, Copilot identified only 10% of the terms flagged by Witty and provided inclusive alternatives for just 9% of the terms where Witty suggested changes. In German, Copilot performed somewhat better, identifying 37% of the terms Witty detected and offering alternatives for 23%.

These results underscore Witty’s much higher accuracy in both identifying biased language and suggesting inclusive wording across English and German, making it a stronger choice for organizations aiming for inclusive and unbiased communication.
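Because the Study 2 percentages are measured against Witty’s findings rather than against a fixed list of terms, the metric amounts to the share of Witty-flagged terms that Copilot also flagged. The Python sketch below assumes each tool’s findings for a text are available as a set of terms; the function name and the toy terms are hypothetical.

```python
def relative_coverage(witty_terms: set[str], copilot_terms: set[str]) -> float:
    """Share of the terms Witty flagged that Copilot also flagged.

    Terms flagged only by Copilot do not raise the score, because the
    baseline is what Witty identified.
    """
    if not witty_terms:
        raise ValueError("Witty flagged no terms; coverage is undefined")
    return len(witty_terms & copilot_terms) / len(witty_terms)


# Toy example (illustrative terms, NOT taken from the analyzed texts).
witty = {"manpower", "dominate", "guys", "crazy"}
copilot = {"manpower", "synergy"}
print(relative_coverage(witty, copilot))  # 0.25
```

The same function covers the suggestion figures if it is given, for each tool, the set of terms for which that tool proposed an inclusive alternative.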

Conclusion

In summary, Microsoft Copilot and Witty serve different purposes in assisting communication. Copilot excels at enhancing productivity and streamlining tasks, yet its bias detection and inclusive language suggestions are less comprehensive. Witty, by contrast, is explicitly designed to address inclusion, offering in-depth bias detection and reliable, culturally sensitive alternatives. Copilot’s shortcomings here are not a minor flaw: they can have severe consequences under laws in effect such as the EU AI Act, the US AI Directives, and anti-discrimination laws in both regions. Biased texts produced by Copilot that go out to the public can cost a company legal fees and reputation, both of which can be avoided by working with the right tools.

Organizations that use Copilot for productivity are well advised to add Witty as a layer of precision for bias detection and for inclusive, unbiased language across varied contexts.

As a result of this study, we developed a proof of concept that integrates Witty directly into Microsoft Copilot. This prototype combines the strengths of both tools: Witty’s inclusive language capabilities and Copilot’s productivity. In this setup, Witty first rephrases any prompt given to Copilot inclusively, then reviews Copilot’s response and refines it for inclusive language.
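The integration flow can be pictured as a three-step wrapper. The Python sketch below is a hypothetical illustration only: the actual Witty and Copilot interfaces are not described here, so both are modeled as plain text-to-text functions supplied by the caller, and every name in the code is an assumption.

```python
from typing import Callable

# Hypothetical stand-in for any service that maps text to text.
TextFn = Callable[[str], str]


def inclusive_copilot(prompt: str,
                      rephrase_prompt: TextFn,
                      generate: TextFn,
                      refine_output: TextFn) -> str:
    """Witty -> Copilot -> Witty, following the proof-of-concept flow."""
    inclusive_prompt = rephrase_prompt(prompt)  # 1. Witty rephrases the prompt inclusively
    draft = generate(inclusive_prompt)          # 2. Copilot answers the rephrased prompt
    return refine_output(draft)                 # 3. Witty reviews and refines the response


# Toy stubs for demonstration only (not real Witty or Copilot behavior).
def fake_witty(text: str) -> str:
    return text.replace("manpower", "staff")


def fake_copilot(prompt: str) -> str:
    return f"Draft for '{prompt}': we need more manpower."


print(inclusive_copilot("Post about manpower", fake_witty, fake_copilot, fake_witty))
# Draft for 'Post about staff': we need more staff.
```

The second Witty pass matters because Copilot can introduce biased wording on its own, as the stub’s “manpower” shows; rephrasing the prompt alone would not catch it.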

Witty ❤️ Microsoft Copilot

The Witty-Copilot Integration offers the best of both worlds. Contact us, and we’ll happily show you the Witty-Copilot integration in action.